"How LRU Cache is Implemented in JavaScript: Managing Memory Efficiently in Web Applications"

Vanessa, a diligent and passionate librarian, was entrusted with the important task of managing the library's collection. With shelves brimming with books of all genres, she took great care to ensure that the library remained a haven for book lovers far and wide.

Adjacent to the library, there was a charming bookstore known as "Book Haven." It boasted shelves of literary treasures, each waiting to be discovered by eager readers. Vanessa often found herself perusing the aisles of Book Haven, carefully selecting books to enrich the library's collection.

However, there was a challenge that Vanessa faced – the library's shelves had limited space. With each new addition, Vanessa had to consider which books would occupy the spots on the shelves. She understood the importance of selecting books that people would truly enjoy reading, not just any book that happened to be available.

This is where the analogy of the Least Recently Used (LRU) cache comes into play. Vanessa had to manage the limited shelf space efficiently, much like how a computer system manages its cache memory.

The books frequently borrowed and read were akin to the most recently used items in the cache, while those that remained untouched for a long time became candidates for replacement.

Vanessa devised a clever system to manage the library's collection effectively. She kept track of the popularity of each book, noting which ones were being borrowed frequently and which ones were gathering dust on the shelves. Whenever she visited Book Haven, she would carefully select new books to replace the least popular ones in the library(p.s. the ones gathering dust on the shelves).

What is the LRU (Least Recently Used) cache?

It is a caching algorithm used in computer science and software engineering to manage the contents of a cache, such as memory or disk space.

In the LRU algorithm, when the cache is full and a new item needs to be added, the least recently used item is evicted to make room for the new item. This eviction policy is based on the assumption that items that have not been accessed recently are less likely to be reaccessed soon.

In Vanessa's story, the Least Recently Used (LRU) cache acts as a dynamic system to efficiently manage the library's collection.

Similar to how Vanessa selects and rotates books based on their popularity and recent usage, the LRU cache automatically maintains a limited number of frequently accessed books on the shelves.

By evicting the least recently borrowed books and replacing them with newer additions or more popular titles, the LRU cache optimizes shelf space and ensures that the most sought-after books remain readily available to patrons, enhancing their overall library experience.

What must the LRU(Least Recently Used) cache have?

Let's relate the characteristics of an LRU cache to Vanessa's story about managing the library's collection:

Eviction Policy: In Vanessa's story, the eviction policy of the LRU cache corresponds to her decision-making process when selecting which books to remove from the shelves to make room for new additions. Similar to how the cache evicts the least recently used item, Vanessa removes the least popular or least frequently borrowed books from the shelves.

Access Time Complexity: The access time complexity of an LRU cache in Vanessa's story reflects the efficiency with which patrons can find and borrow books from the library. With an LRU cache in place, the time it takes for patrons to locate popular or frequently borrowed books is minimized, as these books are readily available on the shelves.

Temporal Locality: Temporal locality in Vanessa's story is akin to the tendency of patrons to borrow books that have been recently accessed or are currently popular. By prioritizing the retention of recently borrowed books and popular titles, the LRU cache ensures that the library's collection remains relevant to the community's reading interests over time.

Limited/Fixed Capacity: The limited capacity of the LRU cache mirrors the finite amount of shelf space available in the library. Just as the cache can only store a certain number of items, the library can only accommodate a limited number of books on its shelves. As such, Vanessa must carefully manage the space to ensure that the most relevant and in-demand books are available to people.

Efficiency: The efficiency of the LRU cache in Vanessa's story corresponds to the effectiveness of the library's collection management practices. By employing an LRU cache-like strategy, Vanessa optimizes the use of shelf space and ensures that the library's resources are allocated efficiently to meet the community's reading needs.

Data Structures To Take Note

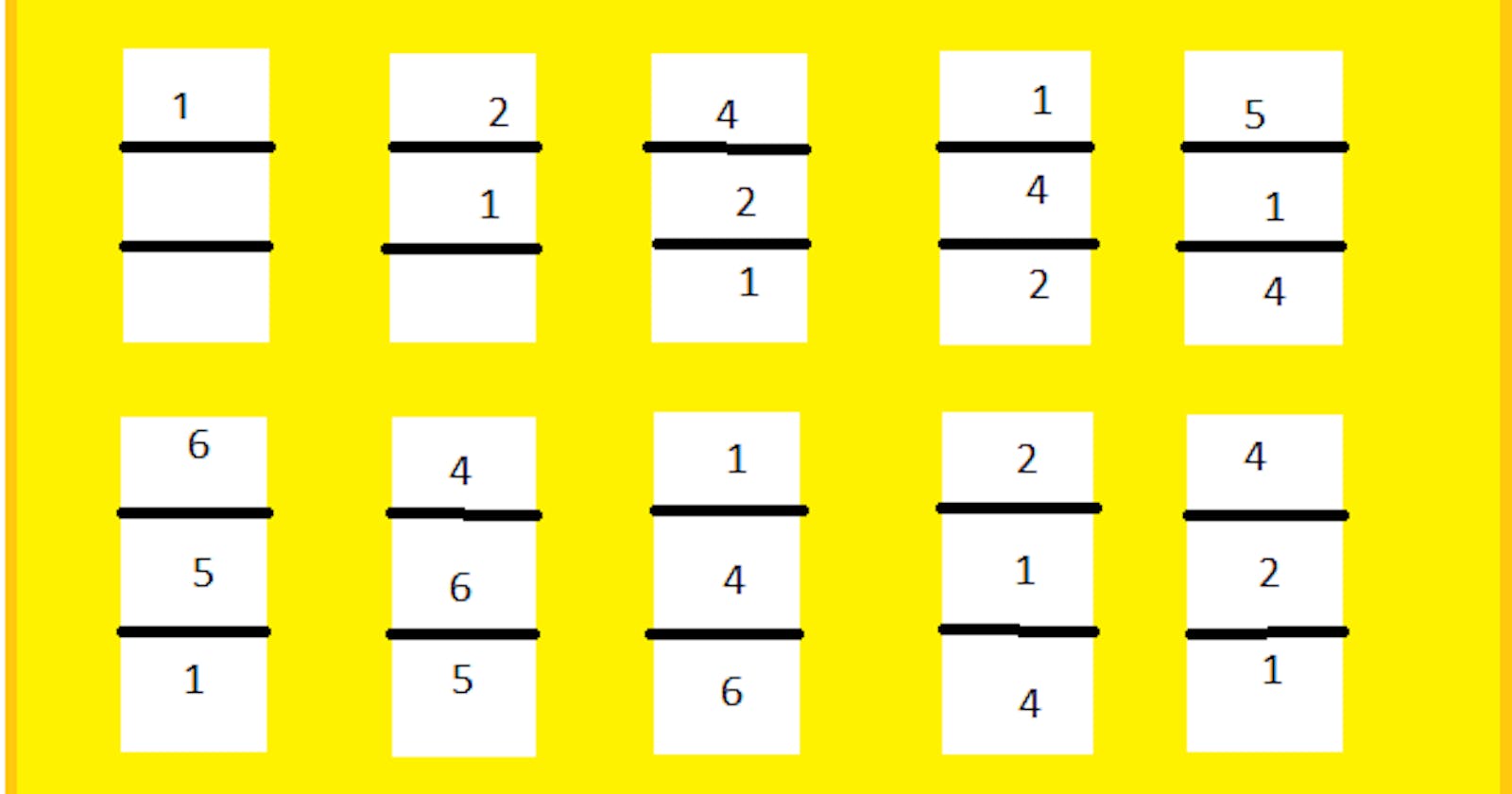

Doubly Linked List

A doubly linked list is a linear data structure where each element, known as a node, contains a data element and two pointers: one pointing to the previous node and one pointing to the next node.

In the context of an LRU cache, a doubly linked list is efficient because it allows for constant-time (O(1)) operations for both insertion and deletion at any position in the list.

This is crucial for maintaining the order of items based on their usage, as the most recently accessed items are moved to the front of the list, while the least recently accessed items are located towards the end.

Hash map

A hash map is a data structure that stores key-value pairs, allowing for fast retrieval of values based on their associated keys. It typically uses a hash function to map keys to indices in an underlying array.

a hash map is efficient because it provides constant-time (O(1)) access to items based on their keys. This allows the cache to quickly determine whether an item is present in the cache and retrieve its corresponding value.

Operations Involved in Lru(Least Recently Used) cache

Detach Operation:

This operation involves deleting an item from the cache temporarily from its current position. The item can then be added to the front of the cache or temporarily removed.

For example:

If an item in the cache needs to be updated or moved due to cache management requirements, the item can be detached from its current position in the cache's linked list structure.

This ensures that the item is temporarily removed from the cache, allowing for subsequent operations such as updating its data or reinserting it into a different position in the cache.

Get Operation:

The Get operation involves retrieving an item from the cache based on a specified key.

When a client requests a value associated with a specific key, the cache performs a lookup operation to find the corresponding item.

Prepend Operation:

Prepending an item to the cache involves adding it to the beginning or front of the cache's data structure

Update Operation:

Updating an item in the cache involves modifying its value. This operation ensures that cached data remains up-to-date and accurate, reflecting the latest state of the underlying data.

TrimCache Operation:

This means trimming the cache. Trimming the cache involves reducing its size or capacity to free up memory or resources.

This operation may be performed periodically or in response to resource constraints to ensure that the cache remains within its allocated limits

Enough of all that let's Implement this In Javascript.....

We need to create our Node which will have two pointers. A pointer to the next node and a pointer to the previous node.

<p data-line="71" class="sync-line" style="margin:0;"></p>

class Node {

constructor(val) {

this.value = val;

this.next = null;

this.prev = null;

}

}

This is how to create the Node.

<p data-line="71" class="sync-line" style="margin:0;"></p>

class LRU {

/**

* Initializes an LRU cache with a specified capacity.

* @param {number} capacity The capacity of the LRU cache.

*/

constructor(capacity) {

// Initialize instance variables

this.length = 0; // Tracks the number of items currently in the cache

this.capacity = capacity; // Maximum capacity of the cache

this.head = undefined; // Pointer to the head of the linked list

this.tail = undefined; // Pointer to the tail of the linked list

this.look_up = new Map(); // Map to store key-node pairs for quick lookup

this.reverse_look_up = new Map(); // Map to store node-key pairs for quick reverse lookup

}

/**

* Creates a new Node with the specified key.

* @param {*} key The key for the new Node.

*/

createNode(key) {

// This implement the creation the node

}

/**

* Updates the cache with the specified key.

* Moves the item to the front of the cache if it exists; otherwise, adds it to the cache.

* @param {*} key The key of the item to be updated.

*/

update(key) {

}

/**

* Retrieves the value associated with the specified key from the cache.

* Moves the accessed item to the front of the cache.

* @param {*} key The key of the item to retrieve.

* @returns {*} The value associated with the specified key, or undefined if the key is not found.

*/

get(key) {

}

/**

* Detaches(remove) the specified node from the linked list.

* @param {Node} node The node to detach from the linked list.

*/

detach(node) {

}

/**

* Prepends the specified node to the front of the linked list.

* @param {Node} node The node to prepend to the linked list.

*/

prepend(node) {

}

/**

* Trims the cache to ensure it does not exceed its capacity.

* Removes the least recently used item from the cache if necessary.

*/

trimCache() {

}

}

The

LRUclass represents a Least Recently Used cache, which stores key-value pairs and evicts the least recently used items when the cache exceeds its capacity.The class constructor initializes the cache with a specified capacity and sets up necessary instance variables.

The methods

createNode,update,get,detach,prepend, andtrimCachehandle various operations related to cache management, including node creation, cache updates, item retrieval, node detachment, node prepend, and cache trimming, respectively. However, the implementation details of thesemethods are missing from the provided code.

Now let's write the method in the class:

<p data-line="71" class="sync-line" style="margin:0;"></p>

createNode(key) {

return new Node(key);

}

It returns the newly created node object, allowing it to be added to the cache's linked list structure or used for other operations within the cache implementation.

<p data-line="71" class="sync-line" style="margin:0;"></p>

prepend(node) {

if (!this.head) {

this.head = this.tail = node;

}

node.next = this.head;

this.head.prev = node;

this.head = node;

}

The prepend(node) function sets the provided node as the new head of the linked list, updating pointers accordingly. It ensures efficient insertion at the front of the list, maintaining proper linkage for subsequent operations.

Just like when Vanessa receives new releases or highly requested books, she places them at the front of the library's shelves to showcase them to patrons.

<p data-line="71" class="sync-line" style="margin:0;"></p>

detach(node) {

if (node.next) {

node.next.prev = node.prev;

}

if (node.prev) {

node.prev.next = node.next;

}

if (this.head === node) {

this.head.ne xt = node;

}

if (this.tail === node) {

this.tail.prev = node;

}

node.next = undefined;

node.prev = undefined;

}

The detach(node) helps to remove the node from any position in the list.

When Vanessa notices that certain books are rarely borrowed or outdated, she temporarily removes them from the library's shelves to make space for more popular or relevant books.

<p data-line="71" class="sync-line" style="margin:0;"></p>

get(Key) {

const node = this.look_up.get(Key);

if (!node) {

return undefined;

}

this.detach(node);

this.prepend(node);

return node.value;

}

The get(key) method is retrieving an item from the cache and involves accessing its value based on a specified key. This operation is performed when an application needs to retrieve data from the cache. The accessed item is typically moved to the front of the cache to indicate that it was recently used.

It first checks the map for the node/value. If the node does not exist It returns undefined.

If it exists, the first thing we do is remove it from its current position and then add it to the front of the list.

<p data-line="71" class="sync-line" style="margin:0;"></p>

update(key) {

let node = this.look_up.get(key);

if (!node) {

node = this.createNode(key);

this.length++;

this.prepend(node);

this.trimCache();

this.look_up.set(key, node);

this.reverse_look_up.set(node, key);

} else {

this.detach(value);

this.prepend(value);

}

}

The update(key) method checks if a node associated with the given key exists in the cache; if not, it creates a new node, adds it to the front of the linked list, trims the cache if necessary, and updates the cache's lookup maps. If the node already exists, it detaches it from the list and reattaches it to the front to indicate recent access.

<p data-line="71" class="sync-line" style="margin:0;"></p>

trimCache() {

if (this.length <= this.capacity) {

return;

}

let tail = this.tail;

this.detach(tail);

let key = this.reverse_look_up(tail);

this.look_up.delete(key);

this.reverse_look_up.delete(tail);

this.length--;

}

The trimCache() method checks if the cache length exceeds its capacity; if so, it removes the least recently used item from the cache. It updates the cache's lookup maps and decreases the cache length to maintain the specified capacity limit.

We have finally finished building our LRU Cache. The LRU cache helps access data that are accessed frequently. It removes data that are less accessed frequently. This helps to manage the memory efficiently.

I hope you enjoyed reading it. If you did kindly comment, like, and follow for more technical articles.

The source code for this article is https://github.com/Ibukun-tech/lru_cache_js.